Direct Reason

Let $BT$ be a boosted tree composed of regression trees $\{T_1, \ldots, T_n\}$ and let $x$ be an instance. The direct reason for $x$ is the term of the binary representation formed by the conjunction of the terms corresponding to the root-to-leaf paths of all $T_i$ that are compatible with $x$. Because it only requires following one path per tree, it is one of the easiest abductive explanations for $x$ to compute, but it can be highly redundant. More information about the direct reason can be found in the article Computing Abductive Explanations for Boosted Trees.
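The definition can be illustrated with a minimal pure-Python sketch. It is not PyXAI's implementation: the tuple encoding of trees, the `direct_reason` helper and the toy model are assumptions made for illustration. Each distinct condition is mapped to one binary variable, and the instance fixes the sign of every variable it meets along its paths.

```python
import operator

# Hypothetical encoding (not PyXAI's): a leaf is a float weight and an internal
# node is (feature, op, threshold, left, right); `right` is followed when the
# condition holds. Features are numbered from 1.
OPS = {">": operator.gt, "==": operator.eq}


def direct_reason(trees, x):
    """Collect the literals met on the path followed by x in every tree."""
    variables = {}  # condition -> identifier of its binary variable
    reason = []     # literals, in order of first appearance
    for node in trees:
        while isinstance(node, tuple):
            feature, op, threshold, left, right = node
            # Variables are numbered lazily, in the order conditions are met.
            var = variables.setdefault((feature, op, threshold), len(variables) + 1)
            holds = OPS[op](x[feature - 1], threshold)
            literal = var if holds else -var
            if literal not in reason:
                reason.append(literal)
            node = right if holds else left
    return tuple(reason)


# Toy model with two trees over two numerical features.
toy_trees = [
    (1, ">", 2, -0.2, (2, ">", 1, 0.1, 0.3)),
    (2, ">", 1, -0.4, 0.5),
]
toy_reason = direct_reason(toy_trees, (4, 0))
print(toy_reason)  # (1, -2)
```

In this toy model the second tree tests a condition already met in the first one, so it adds no new literal: the direct reason is a set of literals, not one entry per visited node.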

| <Explainer Object>.direct_reason(): |
|---|
| Returns the direct reason for the current instance. Returns None if this reason contains some excluded features. All kinds of operators in the conditions are supported. This reason is expressed in terms of binary variables; use the to_features method to obtain a representation based on the original features. |

The basic methods (initialize, set_instance, to_features, is_reason, …) of the explainer module used in the next examples are described in the Explainer Principles page.

Example from Hand-Crafted Trees

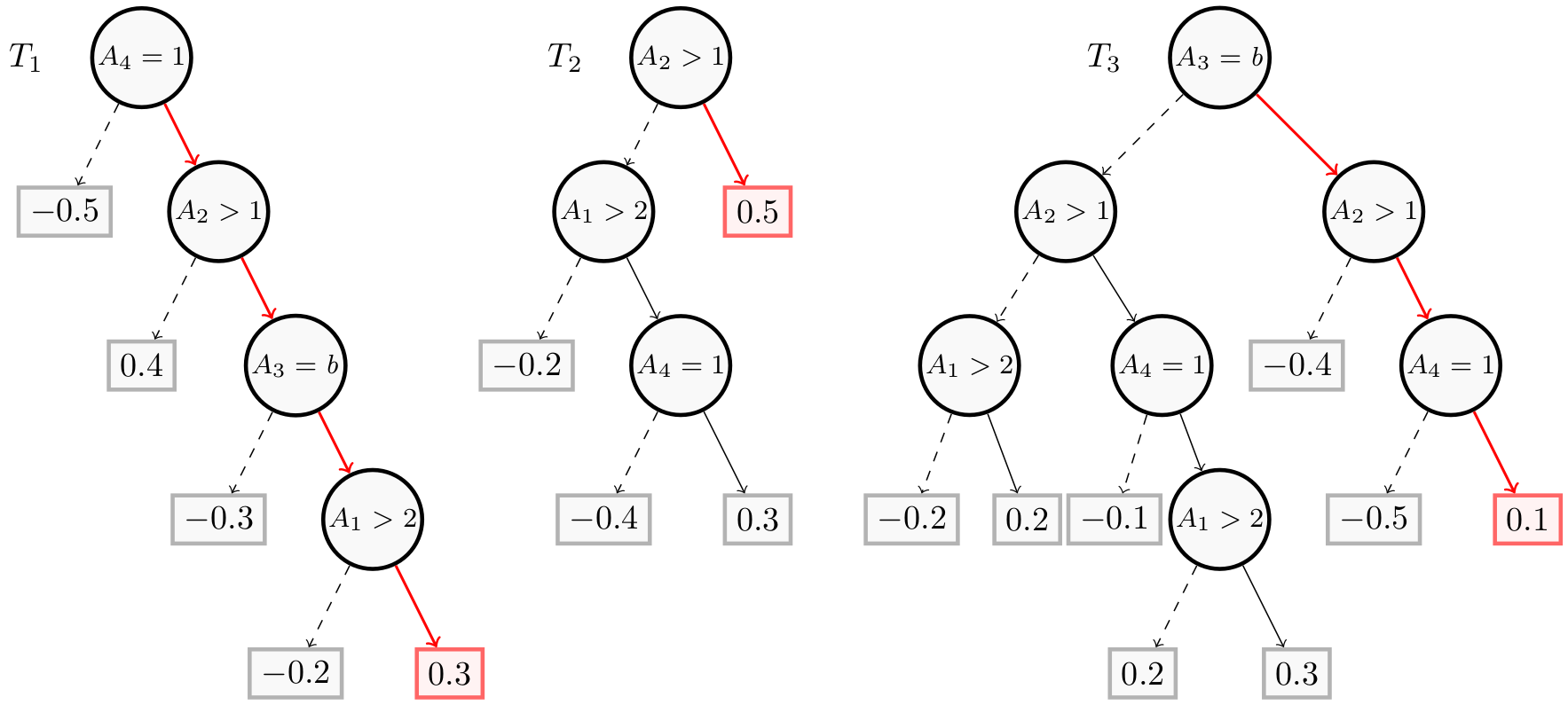

This example uses a binary classification model from the Building Models page. The figure represents a boosted tree $BT$ using $4$ features ($A_1$, $A_2$, $A_3$ and $A_4$), where $A_1$ and $A_2$ are numerical, $A_3$ is categorical and $A_4$ is Boolean. The direct reason for the instance $x$ = ($A_1=4$, $A_2 = 3$, $A_3 = 1$, $A_4 = 1$) is shown in red. This reason contains all features of the instance.

We have $w(T_1, x)=0.3$, $w(T_2, x)=0.5$ and $w(T_3, x)=0.1$. So $W(F, x) = 0.9$. As we are in the case of binary classification and $W(F, x) > 0$, $x$ is classified as a positive instance ($BT(x) = 1$).
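As a sanity check of these weights, the three trees can be traced with a few lines of plain Python. The tuple encoding and the `weight` helper are assumptions made for this sketch, not part of PyXAI; they mirror the node definitions given later on this page, with the right child followed when the condition holds.

```python
import operator

# Hypothetical encoding: (feature, op, threshold, left, right); leaves are floats.
OPS = {">": operator.gt, "==": operator.eq}


def weight(node, x):
    """Follow the path of x in one tree and return the weight of its leaf."""
    while isinstance(node, tuple):
        feature, op, threshold, left, right = node
        node = right if OPS[op](x[feature - 1], threshold) else left
    return node


tree1 = (4, "==", 1, -0.5,
         (2, ">", 1, 0.4,
          (3, "==", 1, -0.3,
           (1, ">", 2, -0.2, 0.3))))
tree2 = (2, ">", 1,
         (1, ">", 2, -0.2, (4, "==", 1, -0.4, 0.3)),
         0.5)
tree3 = (3, "==", 1,
         (2, ">", 1,
          (1, ">", 2, -0.2, 0.2),
          (4, "==", 1, -0.1, (1, ">", 2, 0.2, 0.3))),
         (2, ">", 1, -0.4, (4, "==", 1, -0.5, 0.1)))

x = (4, 3, 1, 1)
weights = [weight(tree, x) for tree in (tree1, tree2, tree3)]
total = sum(weights)
# Binary classification: a positive total weight means the positive class.
prediction = 1 if total > 0 else 0
print(weights, round(total, 2), prediction)  # [0.3, 0.5, 0.1] 0.9 1
```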

In the code below, the features $A_3$ and $A_4$ are treated as numerical, because categorical and Boolean features will only be implemented in future versions of PyXAI.

We now show how to get direct reasons using PyXAI:

from pyxai import Builder, Explainer

node1_1 = Builder.DecisionNode(1, operator=Builder.GT, threshold=2, left=-0.2, right=0.3)

node1_2 = Builder.DecisionNode(3, operator=Builder.EQ, threshold=1, left=-0.3, right=node1_1)

node1_3 = Builder.DecisionNode(2, operator=Builder.GT, threshold=1, left=0.4, right=node1_2)

node1_4 = Builder.DecisionNode(4, operator=Builder.EQ, threshold=1, left=-0.5, right=node1_3)

tree1 = Builder.DecisionTree(4, node1_4)

node2_1 = Builder.DecisionNode(4, operator=Builder.EQ, threshold=1, left=-0.4, right=0.3)

node2_2 = Builder.DecisionNode(1, operator=Builder.GT, threshold=2, left=-0.2, right=node2_1)

node2_3 = Builder.DecisionNode(2, operator=Builder.GT, threshold=1, left=node2_2, right=0.5)

tree2 = Builder.DecisionTree(4, node2_3)

node3_1 = Builder.DecisionNode(1, operator=Builder.GT, threshold=2, left=0.2, right=0.3)

node3_2_1 = Builder.DecisionNode(1, operator=Builder.GT, threshold=2, left=-0.2, right=0.2)

node3_2_2 = Builder.DecisionNode(4, operator=Builder.EQ, threshold=1, left=-0.1, right=node3_1)

node3_2_3 = Builder.DecisionNode(4, operator=Builder.EQ, threshold=1, left=-0.5, right=0.1)

node3_3_1 = Builder.DecisionNode(2, operator=Builder.GT, threshold=1, left=node3_2_1, right=node3_2_2)

node3_3_2 = Builder.DecisionNode(2, operator=Builder.GT, threshold=1, left=-0.4, right=node3_2_3)

node3_4 = Builder.DecisionNode(3, operator=Builder.EQ, threshold=1, left=node3_3_1, right=node3_3_2)

tree3 = Builder.DecisionTree(4, node3_4)

BT = Builder.BoostedTrees([tree1, tree2, tree3], n_classes=2)

We compute the direct reason for this instance:

explainer = Explainer.initialize(BT)

explainer.set_instance((4,3,1,1))

direct = explainer.direct_reason()

print("instance: (4,3,1,1)")

print("binary_representation:", explainer.binary_representation)

print("target_prediction:", explainer.target_prediction)

print("direct:", direct)

print("to_features:", explainer.to_features(direct))

instance: (4,3,1,1)

binary_representation: (1, 2, 3, 4)

target_prediction: 1

direct: (1, 2, 3, 4)

to_features: ('f1 > 2', 'f2 > 1', 'f3 == 1', 'f4 == 1')

As you can see, in this case, the direct reason coincides with the full instance.

Example from a Real Dataset

This example uses the compas.csv dataset. We create one model using the hold-out approach (by default, the test size is set to 30%) and select a correctly classified instance.

from pyxai import Learning, Explainer

learner = Learning.Xgboost("../../../dataset/compas.csv", learner_type=Learning.CLASSIFICATION)

model = learner.evaluate(method=Learning.HOLD_OUT, output=Learning.BT)

instance, prediction = learner.get_instances(model, n=1, correct=True)

data:

Number_of_Priors score_factor Age_Above_FourtyFive

0 0 0 1 \

1 0 0 0

2 4 0 0

3 0 0 0

4 14 1 0

... ... ... ...

6167 0 1 0

6168 0 0 0

6169 0 0 1

6170 3 0 0

6171 2 0 0

Age_Below_TwentyFive African_American Asian Hispanic

0 0 0 0 0 \

1 0 1 0 0

2 1 1 0 0

3 0 0 0 0

4 0 0 0 0

... ... ... ... ...

6167 1 1 0 0

6168 1 1 0 0

6169 0 0 0 0

6170 0 1 0 0

6171 1 0 0 1

Native_American Other Female Misdemeanor Two_yr_Recidivism

0 0 1 0 0 0

1 0 0 0 0 1

2 0 0 0 0 1

3 0 1 0 1 0

4 0 0 0 0 1

... ... ... ... ... ...

6167 0 0 0 0 0

6168 0 0 0 0 0

6169 0 1 0 0 0

6170 0 0 1 1 0

6171 0 0 1 0 1

[6172 rows x 12 columns]

-------------- Information ---------------

Dataset name: ../../../dataset/compas.csv

nFeatures (nAttributes, with the labels): 12

nInstances (nObservations): 6172

nLabels: 2

--------------- Evaluation ---------------

method: HoldOut

output: BT

learner_type: Classification

learner_options: {'seed': 0, 'max_depth': None, 'eval_metric': 'mlogloss'}

--------- Evaluation Information ---------

For the evaluation number 0:

metrics:

accuracy: 66.73866090712744

nTraining instances: 4320

nTest instances: 1852

--------------- Explainer ----------------

For the evaluation number 0:

**Boosted Tree model**

NClasses: 2

nTrees: 100

nVariables: 42

--------------- Instances ----------------

number of instances selected: 1

----------------------------------------------
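The training and test counts reported in this log are consistent with a 30% hold-out split of the 6172 instances. The short check below reproduces them under one assumption that the page does not confirm: the test set size is rounded up to the next integer.

```python
import math

n_instances = 6172   # nInstances reported in the log
test_size = 0.3      # default hold-out test size stated above

# Assumption: the test set size is rounded up to the next integer.
n_test = math.ceil(test_size * n_instances)
n_train = n_instances - n_test
print(n_train, n_test)  # 4320 1852
```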

Finally, we display the direct reason for this instance:

explainer = Explainer.initialize(model, instance)

print("instance:", instance)

print("prediction:", prediction)

print()

direct_reason = explainer.direct_reason()

print("len binary representation:", len(explainer.binary_representation))

print("len direct:", len(direct_reason))

print("is_reason:", explainer.is_reason(direct_reason))

print("to_features:", explainer.to_features(direct_reason))

instance: [0 0 1 0 0 0 0 0 1 0 0]

prediction: 0

len binary representation: 42

len direct: 38

is_reason: True

to_features: ('Number_of_Priors < 0.5', 'score_factor < 0.5', 'Age_Above_FourtyFive >= 0.5', 'Age_Below_TwentyFive < 0.5', 'African_American < 0.5', 'Asian < 0.5', 'Hispanic < 0.5', 'Native_American < 0.5', 'Other >= 0.5', 'Female < 0.5', 'Misdemeanor < 0.5')

Note that this direct reason contains 38 of the 42 binary variables of the instance's binary representation. It explains why the model predicts $0$ for this instance, but it is probably not the most compact reason. Other types of reasons are presented on the Explanations Computation page.
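The output above also shows 38 binary variables rendered as only 11 feature conditions, which indicates that several binary variables refer to the same feature. One way this can happen is sketched below, as an assumption and not as a description of PyXAI's code: conditions on the same feature with different thresholds are merged by keeping the tightest one. The `merge_conditions` helper and the thresholds 1.5 and 2.5 are hypothetical.

```python
def merge_conditions(conditions):
    """Keep, for each (feature, operator) pair, only the tightest threshold.

    `conditions` is a list of (feature, op, threshold) with op in {"<", ">="}.
    """
    tightest = {}
    for feature, op, threshold in conditions:
        key = (feature, op)
        if key not in tightest:
            tightest[key] = threshold
        elif op == "<":
            # "f < 0.5" implies "f < 1.5": the smallest threshold is enough.
            tightest[key] = min(tightest[key], threshold)
        else:
            # "f >= 1.5" implies "f >= 0.5": the largest threshold is enough.
            tightest[key] = max(tightest[key], threshold)
    return [f"{feature} {op} {t}" for (feature, op), t in tightest.items()]


# Hypothetical literals: three thresholds on one feature, one on another.
literals = [
    ("Number_of_Priors", "<", 2.5),
    ("Number_of_Priors", "<", 0.5),
    ("Other", ">=", 0.5),
    ("Number_of_Priors", "<", 1.5),
]
merged = merge_conditions(literals)
print(merged)  # ['Number_of_Priors < 0.5', 'Other >= 0.5']
```

This kind of merging only shortens the feature-level display. It does not remove literals that are unnecessary for the prediction, which is what more compact reasons address.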