Decision Tree Explanations

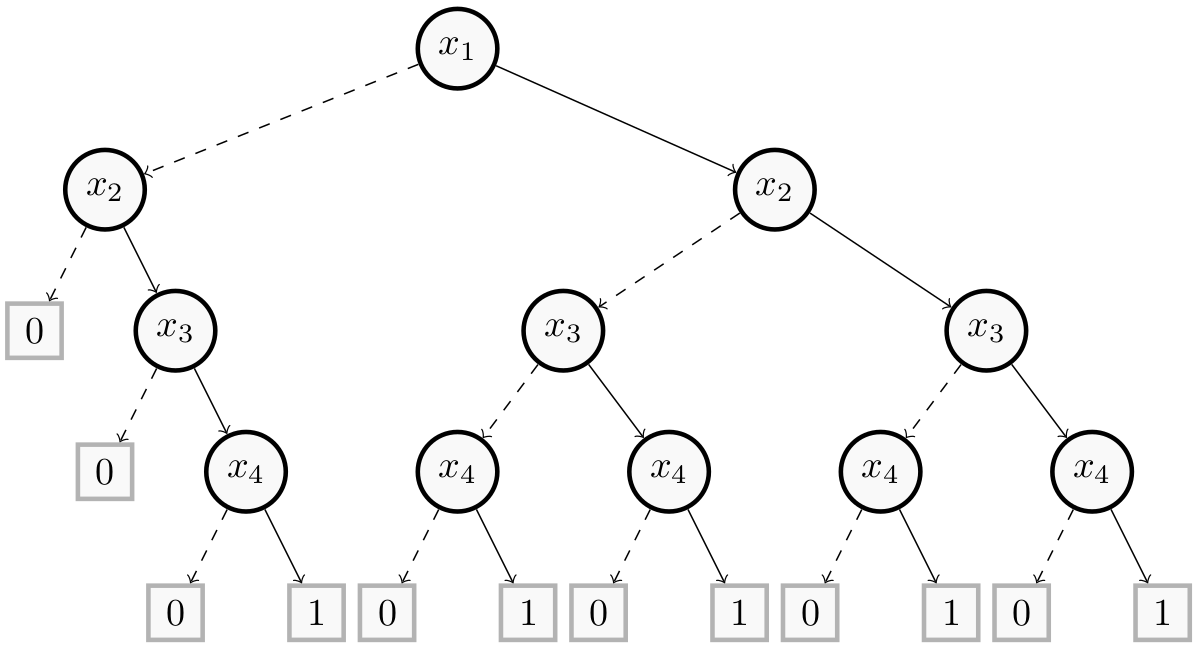

We consider a finite set $\{A_1, \ldots, A_n\}$ of attributes (also known as features). A decision tree over $\{A_1, \ldots, A_n\}$ is a binary tree $T$ in which each internal node is labeled with a Boolean condition on an attribute from $\{A_1, \ldots, A_n\}$ and each leaf is labeled with a number representing a class. The conditions are typically of the form “<id_feature> <operator> <threshold> ?” (such as “$x_4 \ge 0.5$ ?”).
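As an illustration of the condition format above, here is a minimal sketch in plain Python. It is not PyXAI's internal representation: the `Condition` class, the `OPERATORS` table and the 1-based `id_feature` indexing (so that `id_feature=4` denotes $x_4$) are hypothetical choices made only for this example.

```python
import operator
from dataclasses import dataclass
from typing import Sequence

# Hypothetical mapping from operator symbols to Python comparison functions.
OPERATORS = {
    ">=": operator.ge,
    ">": operator.gt,
    "<=": operator.le,
    "<": operator.lt,
    "==": operator.eq,
}


@dataclass(frozen=True)
class Condition:
    """A node test of the form '<id_feature> <operator> <threshold> ?'."""

    id_feature: int  # hypothetical 1-based index: id_feature=4 refers to x_4
    op: str          # one of the keys of OPERATORS
    threshold: float

    def is_satisfied(self, x: Sequence[float]) -> bool:
        # x is a plain sequence of feature values; x[0] holds x_1.
        return OPERATORS[self.op](x[self.id_feature - 1], self.threshold)

    def __str__(self) -> str:
        return f"x_{self.id_feature} {self.op} {self.threshold} ?"


c = Condition(id_feature=4, op=">=", threshold=0.5)
print(c)                                     # x_4 >= 0.5 ?
print(c.is_satisfied([0.0, 1.0, 0.2, 0.7]))  # True
print(c.is_satisfied([0.0, 1.0, 0.2, 0.3]))  # False
```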

To make some figures easier to read, a condition on a binary feature is sometimes represented in a tree by the feature alone.

The prediction $f(x)$ of $T$ for an input instance $x$ is the label of the leaf reached by starting at the root and, at each internal node, moving to the left or to the right child depending on whether or not $x$ satisfies the condition labeling that node.
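The prediction procedure can be sketched as a simple walk from the root to a leaf. This is an illustrative sketch, not PyXAI code: the `Leaf`/`Node` classes, the `predict` function and the example tree are hypothetical, and the sketch assumes (arbitrarily) that the right child is taken when the condition is satisfied and the left child otherwise.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Union


@dataclass
class Leaf:
    label: int  # number representing a class


@dataclass
class Node:
    condition: Callable[[Sequence[float]], bool]  # Boolean test on one feature
    left: "Tree"
    right: "Tree"


Tree = Union[Leaf, Node]


def predict(tree: Tree, x: Sequence[float]) -> int:
    """Return the label of the leaf reached by x, starting from the root."""
    node = tree
    while isinstance(node, Node):
        # Assumption: go right if the condition holds, left otherwise.
        node = node.right if node.condition(x) else node.left
    return node.label


# Hypothetical tree: root tests "x_4 >= 0.5 ?", its right child tests "x_1 >= 0.5 ?".
# x[0] holds x_1 and x[3] holds x_4.
tree = Node(
    condition=lambda x: x[3] >= 0.5,
    left=Leaf(0),
    right=Node(condition=lambda x: x[0] >= 0.5, left=Leaf(1), right=Leaf(0)),
)

print(predict(tree, [0.0, 0.0, 0.0, 0.7]))  # 1
print(predict(tree, [0.9, 0.0, 0.0, 0.7]))  # 0
print(predict(tree, [0.9, 0.0, 0.0, 0.1]))  # 0
```

Because each step follows exactly one child, the cost of a prediction is bounded by the depth of the tree.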

For decision tree classifiers, PyXAI supports explanations for both binary and multi-class classification. In the binary case, the leaves are labeled by 0 or 1; in the multi-class case, they are labeled by integers representing the classes.