Rectification for Decision Trees

Further information on the rectification method for Decision Trees is available in the paper Rectifying Binary Classifiers.

Example from a Hand-Crafted Tree

To illustrate the method, consider a credit scoring scenario.

Each customer is characterized by:

- an annual income $I$ (in k\$),

- whether the customer has already repaid a previous loan ($R$),

- and whether the customer has a permanent position ($PP$).

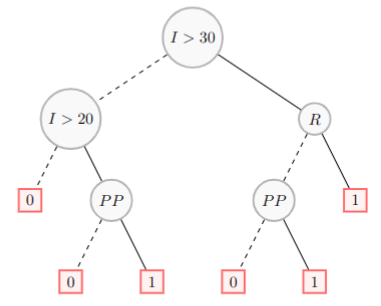

The figure above shows a decision tree $T$ representing the model. The Boolean conditions used in $T$ are $I > 30$, $I > 20$, $R$, and $PP$.

We start by building the decision tree:

```python
from pyxai import Builder, Explainer

# Feature ids follow feature_names: 1 -> I, 2 -> PP, 3 -> R.
# In each node, `right` is followed when the condition holds and `left` otherwise.
node_L_1 = Builder.DecisionNode(3, operator=Builder.EQ, threshold=1, left=0, right=1)            # R == 1
node_L_2 = Builder.DecisionNode(1, operator=Builder.GT, threshold=20, left=0, right=node_L_1)    # I > 20
node_R_1 = Builder.DecisionNode(3, operator=Builder.EQ, threshold=1, left=0, right=1)            # R == 1
node_R_2 = Builder.DecisionNode(2, operator=Builder.EQ, threshold=1, left=node_R_1, right=1)     # PP == 1
root = Builder.DecisionNode(1, operator=Builder.GT, threshold=30, left=node_L_2, right=node_R_2)  # I > 30

tree = Builder.DecisionTree(3, root, feature_names=["I", "PP", "R"])
```
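To make the branch convention explicit, the same tree can be mirrored as a plain Python function. This is only an illustration and not PyXAI code. `predict_T` is a hypothetical name. We assume that `right` is the child followed when a node's condition holds, which is the reading under which $T$ predicts 1 for the instance $x$ considered below.

```python
def predict_T(I: float, PP: int, R: int) -> int:
    """Plain-Python mirror of T: returns 1 (loan granted) or 0 (loan rejected)."""
    if I > 30:                      # root: I > 30
        if PP == 1:                 # node_R_2: PP == 1
            return 1
        return 1 if R == 1 else 0   # node_R_1: R == 1
    if I > 20:                      # node_L_2: I > 20
        return 1 if R == 1 else 0   # node_L_1: R == 1
    return 0


print(predict_T(25, 1, 1))  # 1
print(predict_T(35, 0, 0))  # 0
```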

Consider the instance $x = (I = 25, R = 1, PP = 1)$ corresponding to a customer applying for a loan. We initialize the explainer with this instance and the associated theory (see the Theories page for more information).

```python
loan_types = {
    "numerical": ["I"],
    "binary": ["PP", "R"],
}

# The instance follows the order of feature_names: I = 25, PP = 1, R = 1.
explainer = Explainer.initialize(tree, instance=(25, 1, 1), features_type=loan_types)

print("binary representation: ", explainer.binary_representation)
print("target_prediction:", explainer.target_prediction)
print("to_features:", explainer.to_features(explainer.binary_representation, eliminate_redundant_features=False))
```

```
--------- Theory Feature Types -----------
Before the one-hot encoding of categorical features:
Numerical features: 1
Categorical features: 0
Binary features: 2
Number of features: 3
Characteristics of categorical features: {}
Number of used features in the model (before the encoding of categorical features): 3
Number of used features in the model (after the encoding of categorical features): 3
----------------------------------------------
binary representation: (-1, 2, 3, 4)
target_prediction: 1
to_features: ('I <= 30', 'I > 20', 'PP == 1', 'R == 1')
```
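The binary representation contains one literal per Boolean condition of $T$. Reading the output above, variable 1 stands for $I > 30$, 2 for $I > 20$, 3 for $PP = 1$ and 4 for $R = 1$. A negative literal means the condition is false. The sketch below reproduces this encoding for $x$. The helper names are hypothetical, and the variable numbering is read off this particular output rather than taken from PyXAI's internals.

```python
# Variable id -> (textual condition, test on an instance); numbering read off the output above.
CONDITIONS = {
    1: ("I > 30", lambda x: x["I"] > 30),
    2: ("I > 20", lambda x: x["I"] > 20),
    3: ("PP == 1", lambda x: x["PP"] == 1),
    4: ("R == 1", lambda x: x["R"] == 1),
}


def binary_representation(x: dict) -> tuple:
    """Literal v when condition v holds for x, -v otherwise."""
    return tuple(v if test(x) else -v for v, (_, test) in CONDITIONS.items())


def describe(literal: int) -> str:
    """Readable form of a literal; negative literals negate the condition."""
    text, _ = CONDITIONS[abs(literal)]
    if literal > 0:
        return text
    return text.replace(">", "<=").replace("==", "!=")


x = {"I": 25, "PP": 1, "R": 1}
literals = binary_representation(x)
print(literals)                                # (-1, 2, 3, 4)
print(tuple(describe(lit) for lit in literals))  # ('I <= 30', 'I > 20', 'PP == 1', 'R == 1')
```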

The user (a bank employee) disagrees with this prediction (the loan acceptance). For them, the following classification rule must be obeyed: whenever the client's annual income is at most 30 k\$ ($I \le 30$), the application should be rejected. To perform this rectification, we use the rectify() method of the explainer object. More information about this method is available on the Rectification page.

```python
rectified_model = explainer.rectify(conditions=(-1, ), label=0)
# Keep in mind that the condition (-1, ) means that 'I <= 30'.

print("target_prediction:", explainer.target_prediction)
```

```
-------------- Rectification information:
Classification Rule - Number of nodes: 3
Model - Number of nodes: 11
Model - Number of nodes (after rectification): 17
Model - Number of nodes (after simplification using the theory): 11
Model - Number of nodes (after elimination of redundant nodes): 7
--------------
target_prediction: 0
```
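Conceptually, rectifying a classifier with the rule "$I \le 30 \Rightarrow 0$" produces a classifier that outputs 0 whenever the rule's conditions hold and keeps the original prediction otherwise. The following sketch expresses this with plain functions. `rectify` here is a hypothetical helper that composes functions. It says nothing about how PyXAI actually rewrites the tree.

```python
from typing import Callable, Dict

Instance = Dict[str, float]
Classifier = Callable[[Instance], int]


def predict_T(x: Instance) -> int:
    """The hand-crafted tree T (right branch = condition holds)."""
    if x["I"] > 30:
        return 1 if x["PP"] == 1 or x["R"] == 1 else 0
    if x["I"] > 20:
        return 1 if x["R"] == 1 else 0
    return 0


def rectify(f: Classifier, rule: Callable[[Instance], bool], label: int) -> Classifier:
    """Hypothetical helper: the rule's label wins whenever the rule fires."""
    def rectified(x: Instance) -> int:
        return label if rule(x) else f(x)
    return rectified


rectified_T = rectify(predict_T, lambda x: x["I"] <= 30, 0)
x = {"I": 25, "PP": 1, "R": 1}
print(predict_T(x), rectified_T(x))  # 1 0
```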

Here is the model without any simplification:

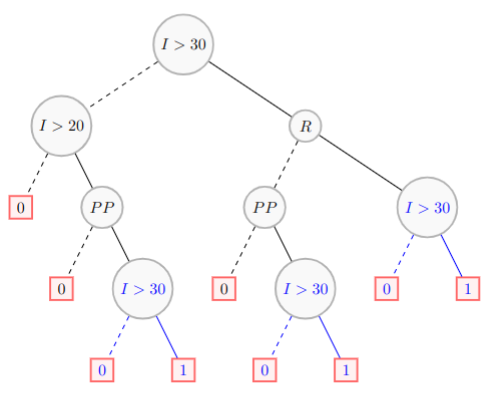

Here is the model once simplified:

We can check that the instance is now correctly classified:

```python
print("target_prediction:", rectified_model.predict_instance((25, 1, 1)))
```

```
target_prediction: 0
```
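Because the rule sends every customer with $I \le 30$ to 0, the tests on $I > 20$ and $R$ in the left part of $T$ no longer affect the outcome. Below is a hand-built tree with the 7 nodes reported after elimination of redundant nodes. `simplified` is our own reconstruction, not PyXAI output. The sketch also checks exhaustively that this tree agrees with the rectified model, using incomes on each side of the thresholds 20 and 30 plus the thresholds themselves.

```python
from itertools import product


def predict_T(I, PP, R):
    """Original tree T."""
    if I > 30:
        return 1 if PP == 1 or R == 1 else 0
    if I > 20:
        return 1 if R == 1 else 0
    return 0


def rectified(I, PP, R):
    """T corrected by the rule 'I <= 30 -> 0'."""
    return 0 if I <= 30 else predict_T(I, PP, R)


def simplified(I, PP, R):
    """7 nodes: test I > 30, leaf 0, test PP == 1, leaf 1, test R == 1, leaves 0 and 1."""
    if I > 30:
        if PP == 1:
            return 1
        return 1 if R == 1 else 0
    return 0


# Incomes on both sides of the thresholds 20 and 30, plus the thresholds themselves.
incomes = (15, 20, 25, 30, 35)
assert all(rectified(*v) == simplified(*v) for v in product(incomes, (0, 1), (0, 1)))
print(simplified(25, 1, 1))  # 0
```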

Example from a Real Dataset

For this example, we use the compas.csv dataset. We train a model using the hold-out approach (by default, the test size is set to 30%) and select a misclassified instance.
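As a quick sanity check on the split sizes printed below (4320 training and 1852 test instances out of 6172), the arithmetic can be reproduced. This assumes the split follows scikit-learn's convention of rounding the test part up.

```python
import math

n_instances = 6172   # nInstances reported for compas.csv
test_size = 0.30     # default hold-out proportion for the test set

# Assumption: the test count is rounded up, as scikit-learn's train_test_split does.
n_test = math.ceil(test_size * n_instances)
n_train = n_instances - n_test
print(n_train, n_test)  # 4320 1852
```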

```python
from pyxai import Learning, Explainer

# Train a decision tree on compas.csv with a hold-out split.
learner = Learning.Scikitlearn("../dataset/compas.csv", learner_type=Learning.CLASSIFICATION)
model = learner.evaluate(method=Learning.HOLD_OUT, output=Learning.DT)

# Select one test instance that the model misclassifies.
dict_information = learner.get_instances(model, n=1, indexes=Learning.TEST, correct=False, details=True)
instance = dict_information["instance"]
label = dict_information["label"]
prediction = dict_information["prediction"]

print("prediction:", prediction)
```

```
data:
      Number_of_Priors  score_factor  Age_Above_FourtyFive  \
0                    0             0                     1
1                    0             0                     0
2                    4             0                     0
3                    0             0                     0
4                   14             1                     0
...                ...           ...                   ...
6167                 0             1                     0
6168                 0             0                     0
6169                 0             0                     1
6170                 3             0                     0
6171                 2             0                     0

      Age_Below_TwentyFive  African_American  Asian  Hispanic  \
0                        0                 0      0         0
1                        0                 1      0         0
2                        1                 1      0         0
3                        0                 0      0         0
4                        0                 0      0         0
...                    ...               ...    ...       ...
6167                     1                 1      0         0
6168                     1                 1      0         0
6169                     0                 0      0         0
6170                     0                 1      0         0
6171                     1                 0      0         1

      Native_American  Other  Female  Misdemeanor  Two_yr_Recidivism
0                   0      1       0            0                  0
1                   0      0       0            0                  1
2                   0      0       0            0                  1
3                   0      1       0            1                  0
4                   0      0       0            0                  1
...               ...    ...     ...          ...                ...
6167                0      0       0            0                  0
6168                0      0       0            0                  0
6169                0      1       0            0                  0
6170                0      0       1            1                  0
6171                0      0       1            0                  1

[6172 rows x 12 columns]
-------------- Information ---------------
Dataset name: ../dataset/compas.csv
nFeatures (nAttributes, with the labels): 12
nInstances (nObservations): 6172
nLabels: 2
--------------- Evaluation ---------------
method: HoldOut
output: DT
learner_type: Classification
learner_options: {'max_depth': None, 'random_state': 0}
--------- Evaluation Information ---------
For the evaluation number 0:
metrics:
   accuracy: 65.33477321814254
   precision: 65.20423600605145
   recall: 51.126927639383155
   f1_score: 57.31382978723405
   specificity: 77.20515361744302
   true_positive: 431
   true_negative: 779
   false_positive: 230
   false_negative: 412
   sklearn_confusion_matrix: [[779, 230], [412, 431]]
nTraining instances: 4320
nTest instances: 1852

--------------- Explainer ----------------
For the evaluation number 0:
**Decision Tree Model**
nFeatures: 11
nNodes: 539
nVariables: 46

--------------- Instances ----------------
number of instances selected: 1
----------------------------------------------
prediction: 0
```
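All the metrics in this report derive from the confusion matrix, which scikit-learn lays out as [[TN, FP], [FN, TP]] for labels 0 and 1. Recomputing them is a simple way to read the report. The variable names below are our own.

```python
# Counts taken from sklearn_confusion_matrix [[779, 230], [412, 431]].
tn, fp, fn, tp = 779, 230, 412, 431

accuracy = 100 * (tp + tn) / (tp + tn + fp + fn)
precision = 100 * tp / (tp + fp)
recall = 100 * tp / (tp + fn)          # true positive rate
specificity = 100 * tn / (tn + fp)     # true negative rate
f1_score = 2 * precision * recall / (precision + recall)

for name, value in [("accuracy", accuracy), ("precision", precision), ("recall", recall),
                    ("f1_score", f1_score), ("specificity", specificity)]:
    print(f"{name}: {value:.2f}")
```

The four counts add up to 1852, which is the number of test instances.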

We initialize the explainer with the associated theory and the selected instance:

```python
compas_types = {
    "numerical": ["Number_of_Priors"],
    "binary": ["Misdemeanor", "score_factor", "Female"],
    "categorical": {
        "{African_American,Asian,Hispanic,Native_American,Other}": ["African_American", "Asian", "Hispanic", "Native_American", "Other"],
        "Age*": ["Above_FourtyFive", "Below_TwentyFive"],
    },
}

explainer = Explainer.initialize(model, instance=instance, features_type=compas_types)
```

```
--------- Theory Feature Types -----------
Before the one-hot encoding of categorical features:
Numerical features: 1
Categorical features: 2
Binary features: 3
Number of features: 6
Characteristics of categorical features: {'African_American': ['{African_American,Asian,Hispanic,Native_American,Other}', 'African_American', ['African_American', 'Asian', 'Hispanic', 'Native_American', 'Other']], 'Asian': ['{African_American,Asian,Hispanic,Native_American,Other}', 'Asian', ['African_American', 'Asian', 'Hispanic', 'Native_American', 'Other']], 'Hispanic': ['{African_American,Asian,Hispanic,Native_American,Other}', 'Hispanic', ['African_American', 'Asian', 'Hispanic', 'Native_American', 'Other']], 'Native_American': ['{African_American,Asian,Hispanic,Native_American,Other}', 'Native_American', ['African_American', 'Asian', 'Hispanic', 'Native_American', 'Other']], 'Other': ['{African_American,Asian,Hispanic,Native_American,Other}', 'Other', ['African_American', 'Asian', 'Hispanic', 'Native_American', 'Other']], 'Age_Above_FourtyFive': ['Age', 'Above_FourtyFive', ['Above_FourtyFive', 'Below_TwentyFive']], 'Age_Below_TwentyFive': ['Age', 'Below_TwentyFive', ['Above_FourtyFive', 'Below_TwentyFive']]}
Number of used features in the model (before the encoding of categorical features): 6
Number of used features in the model (after the encoding of categorical features): 11
----------------------------------------------
```
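The two feature counts in this summary (6 before and 11 after one-hot encoding) follow from `compas_types`. There is one numerical feature, three binary ones and two categorical ones, and the categorical ones cover five and two columns respectively. The sketch below recomputes these counts. `one_hot_columns` is a hypothetical helper that encodes our reading of the notation: a brace-enclosed list names the columns explicitly, while `Age*` acts as a wildcard on column names, as the `Age_...` entries of the summary suggest.

```python
from fnmatch import fnmatch

# Feature columns of compas.csv as printed earlier (Two_yr_Recidivism is the label).
COLUMNS = [
    "Number_of_Priors", "score_factor", "Age_Above_FourtyFive", "Age_Below_TwentyFive",
    "African_American", "Asian", "Hispanic", "Native_American", "Other",
    "Female", "Misdemeanor",
]

compas_types = {
    "numerical": ["Number_of_Priors"],
    "binary": ["Misdemeanor", "score_factor", "Female"],
    "categorical": {
        "{African_American,Asian,Hispanic,Native_American,Other}":
            ["African_American", "Asian", "Hispanic", "Native_American", "Other"],
        "Age*": ["Above_FourtyFive", "Below_TwentyFive"],
    },
}


def one_hot_columns(key: str, columns: list) -> list:
    """Columns covered by one categorical entry (our reading of the notation)."""
    if key.startswith("{") and key.endswith("}"):
        return key[1:-1].split(",")                  # explicit set of column names
    return [c for c in columns if fnmatch(c, key)]   # wildcard such as "Age*"


n_plain = len(compas_types["numerical"]) + len(compas_types["binary"])
n_before = n_plain + len(compas_types["categorical"])
n_after = n_plain + sum(len(one_hot_columns(k, COLUMNS)) for k in compas_types["categorical"])
print(n_before, n_after)  # 6 11
```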

We compute a sufficient reason explaining why the model predicts 0 for this instance:

```python
reason = explainer.sufficient_reason(n=1)
print("explanation:", reason)
print("to_features:", explainer.to_features(reason))
```

```
explanation: (-1, -2, -3, -4)
to_features: ('Number_of_Priors <= 0.5', 'score_factor = 0', 'Age != Below_TwentyFive')
```
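A sufficient reason is a subset-minimal set of the instance's literals that forces the prediction: every instance satisfying it, and consistent with the theory, receives the same class. PyXAI computes such reasons itself. The brute-force sketch below only illustrates the definition. It uses the credit tree from the first example, whose four Boolean variables are 1: $I > 30$, 2: $I > 20$, 3: $PP = 1$ and 4: $R = 1$. The sketch enumerates all assignments of these variables and discards those that violate the theory constraint "$I > 30$ implies $I > 20$". All helper names are hypothetical.

```python
from itertools import combinations, product

VARIABLES = (1, 2, 3, 4)  # 1: I > 30, 2: I > 20, 3: PP == 1, 4: R == 1


def predict(a: dict) -> int:
    """Credit tree T evaluated on a truth assignment of its conditions."""
    if a[1]:
        return 1 if a[3] or a[4] else 0
    if a[2]:
        return 1 if a[4] else 0
    return 0


def consistent(a: dict) -> bool:
    """Theory: I > 30 implies I > 20."""
    return a[2] or not a[1]


def is_sufficient(term, prediction) -> bool:
    """True if every consistent assignment satisfying all literals of term yields prediction."""
    for values in product((False, True), repeat=len(VARIABLES)):
        a = dict(zip(VARIABLES, values))
        satisfies = all(a[abs(lit)] == (lit > 0) for lit in term)
        if consistent(a) and satisfies and predict(a) != prediction:
            return False
    return True


def sufficient_reasons(literals, prediction):
    """Subset-minimal sufficient subsets of literals, found by increasing size."""
    found = []
    for k in range(len(literals) + 1):
        for term in combinations(literals, k):
            if any(set(r) <= set(term) for r in found):
                continue  # contains a smaller reason, so it is not minimal
            if is_sufficient(term, prediction):
                found.append(term)
    return found


print(sufficient_reasons((-1, 2, 3, 4), 1))  # [(2, 4)], i.e. I > 20 and R == 1
```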

Suppose that the user knows that every instance covered by the explanation (-1, -2, -3, -4) should be classified as positive. The model must then be rectified with the corresponding classification rule. Once the model has been corrected, the instance is classified as the user expects:

```python
model = explainer.rectify(conditions=reason, label=1)
print("new prediction:", model.predict_instance(instance))
```

```
-------------- Rectification information:
Classification Rule - Number of nodes: 9
Model - Number of nodes: 1079
Model - Number of nodes (after rectification): 3559
Model - Number of nodes (after simplification using the theory): 1079
Model - Number of nodes (after elimination of redundant nodes): 619
--------------
new prediction: 1
```
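The "Classification Rule - Number of nodes" values are 3 for the one-condition rule of the first example and 9 for the four-condition rule here. Both match a rule written as a chain of tests, assuming one decision node per condition, one leaf carrying the rule's label, and one leaf per test where the rule does not fire. The sketch below builds such a chain and counts its nodes. This representation is our assumption, and `rule_tree`, `count_nodes` and `covers` are hypothetical helpers. `covers` spells out what it means for an instance to be covered by a rule: its binary representation contains every literal of the rule.

```python
from typing import Tuple, Union

# A tree is either a leaf (the rule's label, or None where the rule does not fire)
# or a dict holding the tested literal and its two children.
Tree = Union[int, None, dict]


def rule_tree(conditions: Tuple[int, ...], label: int) -> Tree:
    """Chain of tests: the label is reached only if every condition holds."""
    tree: Tree = label
    for literal in reversed(conditions):
        tree = {"test": literal, "true": tree, "false": None}
    return tree


def count_nodes(tree: Tree) -> int:
    """Decision nodes plus leaves."""
    if isinstance(tree, dict):
        return 1 + count_nodes(tree["true"]) + count_nodes(tree["false"])
    return 1  # a leaf


def covers(conditions, binary_representation) -> bool:
    """An instance is covered when it satisfies every literal of the rule."""
    return set(conditions) <= set(binary_representation)


print(count_nodes(rule_tree((-1,), 0)))              # 3
print(count_nodes(rule_tree((-1, -2, -3, -4), 1)))   # 9
print(covers((-1,), (-1, 2, 3, 4)))                  # True
```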