Building Decision Trees

This page explains how to build a Decision Tree from tree elements (nodes and leaves). To illustrate this, we take an example from the paper "On the Explanatory Power of Decision Trees", which recognizes Cattleya orchids.

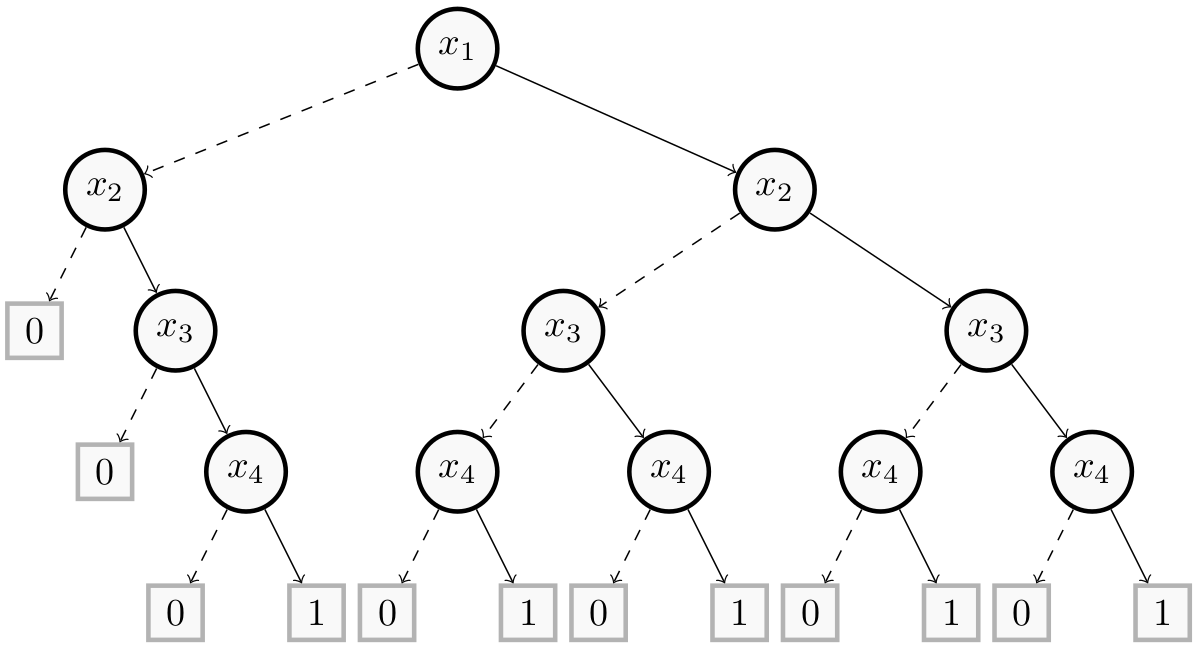

The decision tree represented by this figure separates Cattleya orchids from other orchids using the following features:

- $x_1$: has fragrant flowers.

- $x_2$: has one or two leaves.

- $x_3$: has large flowers.

- $x_4$: is sympodial.

When a leaf is equal to $1$, the instance is classified as a Cattleya orchid; otherwise, it is considered to belong to another species.

Building the Model

First, we need to import the necessary modules. Recall that the Builder module contains methods to build the Decision Tree, while the Explainer module provides methods to explain it.

from pyxai import Builder, Explainer

Next, we build the tree in a bottom-up way, that is, from the leaves to the root. So we start with the $x_4$ nodes.

node_x4_1 = Builder.DecisionNode(4, left=0, right=1)

node_x4_2 = Builder.DecisionNode(4, left=0, right=1)

node_x4_3 = Builder.DecisionNode(4, left=0, right=1)

node_x4_4 = Builder.DecisionNode(4, left=0, right=1)

node_x4_5 = Builder.DecisionNode(4, left=0, right=1)

The DecisionNode class takes as parameters, in order, the identifier of the feature, then the left and right children, each of which is either a leaf value or another node.

In this example, as each feature is binary (i.e., it takes either 0 or 1 as its value), we do not pass the operator and threshold parameters to the Builder.DecisionNode class. These parameters therefore keep their default values (respectively, OperatorCondition.GE and $0.5$), which gives each node a condition of the form “$x_4 \ge 0.5$ ?”. In the Boosted Trees page, more complex conditions are created.
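To make the default condition concrete, here is a minimal sketch in plain Python that does not use PyXAI. The helper name `condition_holds` is hypothetical, and the reading that the right child is followed when the condition holds is an assumption, although it is consistent with the outputs shown later on this page.

```python
def condition_holds(instance, feature_id, threshold=0.5):
    """Return True when the condition x_feature_id >= threshold is satisfied.

    Feature identifiers start at 1, whereas Python indices start at 0.
    """
    return instance[feature_id - 1] >= threshold


instance = (1, 0, 1, 1)
print(condition_holds(instance, 4))  # True: x_4 = 1 >= 0.5
print(condition_holds(instance, 2))  # False: x_2 = 0 < 0.5
```

With binary features, the threshold $0.5$ simply separates the value 0 from the value 1.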

Next, we construct the remaining nodes:

node_x3_1 = Builder.DecisionNode(3, left=0, right=node_x4_1)

node_x3_2 = Builder.DecisionNode(3, left=node_x4_2, right=node_x4_3)

node_x3_3 = Builder.DecisionNode(3, left=node_x4_4, right=node_x4_5)

node_x2_1 = Builder.DecisionNode(2, left=0, right=node_x3_1)

node_x2_2 = Builder.DecisionNode(2, left=node_x3_2, right=node_x3_3)

node_x1_1 = Builder.DecisionNode(1, left=node_x2_1, right=node_x2_2)
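As a sanity check on the structure defined by these nodes, the same tree can be mirrored in plain Python. This is only an illustrative sketch: the nested-tuple encoding and the `predict` function are hypothetical and are not part of PyXAI, and the sketch assumes that the right child is followed when $x_i \ge 0.5$ holds.

```python
from itertools import product

# A node is (feature_id, left, right); a leaf is the integer 0 or 1.
x4 = (4, 0, 1)          # all five x_4 nodes have the same shape
x3_1 = (3, 0, x4)
x3_2 = (3, x4, x4)
x3_3 = (3, x4, x4)
x2_1 = (2, 0, x3_1)
x2_2 = (2, x3_2, x3_3)
root = (1, x2_1, x2_2)


def predict(node, instance):
    """Walk from `node` down to a leaf and return the leaf value."""
    while not isinstance(node, int):
        feature_id, left, right = node
        node = right if instance[feature_id - 1] >= 0.5 else left
    return node


# Enumerate the 16 possible binary instances and keep the positive ones.
positives = [x for x in product((0, 1), repeat=4) if predict(root, x) == 1]

print(predict(root, (1, 1, 1, 1)))  # 1
print(predict(root, (0, 0, 0, 0)))  # 0
print(len(positives))               # 5
```

Under these assumptions, 5 of the 16 possible instances are classified as Cattleya orchids.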

We can now define the Decision Tree using the DecisionTree class:

tree = Builder.DecisionTree(4, node_x1_1, force_features_equal_to_binaries=True)

This class takes as parameters the number of features, the root node and the keyword argument force_features_equal_to_binaries=True.

The parameter force_features_equal_to_binaries allows one to make binary variables equal to feature identifiers. These binary variables are used to represent explanations, and their values and signs indicate whether the condition at each node is satisfied or not. By default, these binary variables have random values depending on the order in which the tree is traversed. Setting the parameter force_features_equal_to_binaries to True ensures that the binary variables no longer receive random values. This allows us to have explanations that match the features, without having to use the to_features method. However, this functionality cannot be used with all models: it is not compatible with trees in which nodes have different conditions on the same feature, because it assumes that the features are the conditions.
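The sign convention can be illustrated with a short plain-Python sketch. The helper `to_literals` is hypothetical (it is not a PyXAI function); it assumes one condition "$x_i \ge 0.5$" per feature, so that the literal $i$ means the condition on $x_i$ is satisfied and $-i$ means it is not.

```python
def to_literals(instance, threshold=0.5):
    """Encode an instance as signed feature identifiers.

    Literal i means "x_i >= threshold" holds; literal -i means it does not.
    """
    return tuple(
        feature_id if value >= threshold else -feature_id
        for feature_id, value in enumerate(instance, start=1)
    )


print(to_literals((1, 1, 1, 1)))  # (1, 2, 3, 4)
print(to_literals((0, 0, 0, 0)))  # (-1, -2, -3, -4)
print(to_literals((1, 0, 1, 0)))  # (1, -2, 3, -4)
```

An explanation is then a subset of these literals, which is why the reasons on this page can be read directly as statements about features.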

More details about the DecisionNode and DecisionTree classes are given in the Building Models page.

Explaining the Model

We can now compute explanations. Let us start with the instance (1,1,1,1):

print("instance = (1,1,1,1):")

explainer = Explainer.initialize(tree, instance=(1,1,1,1))

print("target_prediction:", explainer.target_prediction)

direct = explainer.direct_reason()

print("direct:", direct)

assert direct == (1, 2, 3, 4), "The direct reason is not good !"

sufficient_reasons = explainer.sufficient_reason(n=Explainer.ALL)

print("sufficient_reasons:", sufficient_reasons)

assert sufficient_reasons == ((1, 4), (2, 3, 4)), "The sufficient reasons are not good!"

for sufficient in sufficient_reasons:
    assert explainer.is_sufficient_reason(sufficient), "This is not a sufficient reason!"

minimals = explainer.minimal_sufficient_reason()

print("Minimal sufficient reasons:", minimals)

assert minimals == (1, 4), "The minimal sufficient reasons are not good!"

contrastives = explainer.contrastive_reason(n=Explainer.ALL)

print("Contrastives:", contrastives)

for contrastive in contrastives:
    assert explainer.is_contrastive_reason(contrastive), "This is not a contrastive reason!"

instance = (1,1,1,1):

target_prediction: 1

direct: (1, 2, 3, 4)

sufficient_reasons: ((1, 4), (2, 3, 4))

Minimal sufficient reasons: (1, 4)

Contrastives: ((4,), (1, 2), (1, 3))
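Because only 16 instances exist, these reasons can be cross-checked by brute force. The sketch below does not use PyXAI and is not how the library computes explanations. It re-encodes the tree as nested tuples (a hypothetical encoding), assumes the right child is followed when $x_i \ge 0.5$ holds, and treats a contrastive reason as a minimal set of binary features whose flipping changes the prediction.

```python
from itertools import combinations, product

# A node is (feature_id, left, right); a leaf is the integer 0 or 1.
x4 = (4, 0, 1)
root = (1, (2, 0, (3, 0, x4)), (2, (3, x4, x4), (3, x4, x4)))


def predict(instance):
    node = root
    while not isinstance(node, int):
        feature_id, left, right = node
        node = right if instance[feature_id - 1] >= 0.5 else left
    return node


def is_sufficient(instance, features):
    """True if every instance agreeing on `features` gets the same prediction."""
    target = predict(instance)
    for other in product((0, 1), repeat=len(instance)):
        agrees = all(other[f - 1] == instance[f - 1] for f in features)
        if agrees and predict(other) != target:
            return False
    return True


def is_contrastive(instance, features):
    """True if flipping exactly `features` changes the prediction."""
    flipped = tuple(
        1 - v if i in features else v for i, v in enumerate(instance, start=1)
    )
    return predict(flipped) != predict(instance)


def minimal_subsets(instance, check):
    """Subset-minimal feature sets passing `check`, found by increasing size."""
    found = []
    ids = range(1, len(instance) + 1)
    for size in range(1, len(instance) + 1):
        for subset in combinations(ids, size):
            # Skip supersets of an already accepted set: they are not minimal.
            if any(set(prev) <= set(subset) for prev in found):
                continue
            if check(instance, subset):
                found.append(subset)
    return found


instance = (1, 1, 1, 1)
print(minimal_subsets(instance, is_sufficient))   # [(1, 4), (2, 3, 4)]
print(minimal_subsets(instance, is_contrastive))  # [(4,), (1, 2), (1, 3)]
```

Under these assumptions, the enumeration agrees with the sufficient and contrastive reasons printed above. The exhaustive search is exponential in the number of features, so it is only suitable for toy examples such as this one.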

And now with the instance (0,0,0,0):

print("\ninstance = (0,0,0,0):")

explainer.set_instance((0,0,0,0))

print("target_prediction:", explainer.target_prediction)

direct = explainer.direct_reason()

print("direct:", direct)

assert direct == (-1, -2), "The direct reason is not good !"

sufficient_reasons = explainer.sufficient_reason(n=Explainer.ALL)

print("sufficient_reasons:", sufficient_reasons)

assert sufficient_reasons == ((-4,), (-1, -2), (-1, -3)), "The sufficient reasons are not good !"

for sufficient in sufficient_reasons:
    assert explainer.is_sufficient_reason(sufficient), "This is not a sufficient reason!"

minimals = explainer.minimal_sufficient_reason(n=1)

print("Minimal sufficient reasons:", minimals)

assert minimals == (-4,), "The minimal sufficient reasons are not good !"

instance = (0,0,0,0):

target_prediction: 0

direct: (-1, -2)

sufficient_reasons: ((-4,), (-1, -2), (-1, -3))

Minimal sufficient reasons: (-4,)
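The direct reasons printed for both instances are consistent with reading a direct reason as the list of conditions met along the path from the root to the leaf. The following plain-Python sketch illustrates that reading. It is not PyXAI code: the nested-tuple encoding and the function `direct_reason` are hypothetical, and the right child is assumed to be followed when $x_i \ge 0.5$ holds.

```python
# A node is (feature_id, left, right); a leaf is the integer 0 or 1.
x4 = (4, 0, 1)
root = (1, (2, 0, (3, 0, x4)), (2, (3, x4, x4), (3, x4, x4)))


def direct_reason(instance):
    """Return (literals met on the root-to-leaf path, predicted leaf value)."""
    node = root
    literals = []
    while not isinstance(node, int):
        feature_id, left, right = node
        if instance[feature_id - 1] >= 0.5:
            literals.append(feature_id)    # condition satisfied: go right
            node = right
        else:
            literals.append(-feature_id)   # condition not satisfied: go left
            node = left
    return tuple(literals), node


print(direct_reason((0, 0, 0, 0)))  # ((-1, -2), 0)
print(direct_reason((1, 1, 1, 1)))  # ((1, 2, 3, 4), 1)
```

For the instance (0,0,0,0), the path stops after two nodes, which is why the direct reason contains only the literals $-1$ and $-2$.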

Details on explanations are given in the Explanations Computation page.